GoogLeNet / Inception Net

Artificial intelligence has expanded rapidly in recent years, and one of its principal achievements is deep learning. Deep learning, a branch of machine learning, uses artificial neural networks loosely inspired by the human brain to learn how to make decisions from data. GoogLeNet, developed by Google researchers in 2014, is one of the most significant advances in deep learning. Its architecture changed how CNNs were conceived in terms of depth, efficiency and performance.

This article covers the background to GoogLeNet, its architecture and key features, its applications, and its impact as a breakthrough in deep learning. It describes how GoogLeNet succeeded and explains why it is still considered a reference model in computer vision.

The History of Convolutional Neural Networks (CNNs)

GoogLeNet was not the first CNN: networks of this kind had already been applied to image classification and recognition. However, the earlier networks had several difficulties, such as high computational cost and overfitting. To appreciate what GoogLeNet contributed, it helps to review the history of CNNs and the landmarks that defined it.

The Early Days of CNNs: LeNet-5

The history of CNNs goes back to the late 1980s and the 1990s and the work of Yann LeCun. His LeNet-5, one of the first CNN architectures, was aimed at handwritten digit recognition, for example reading zip codes on mail. LeNet-5 was a relatively simple CNN: two convolutional layers, each followed by a pooling layer, then fully connected layers and an output layer. Although very simple, LeNet-5 served as the basis for later CNN models for a long time.

The Breakthrough of AlexNet

The next breakthrough came in 2012 with AlexNet, developed by Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton. By sharply reducing the error rate in the ImageNet competition, AlexNet helped bring CNNs into the mainstream. Key innovations included:

ReLU Activation Function: The ReLU (Rectified Linear Unit) activation replaced saturating functions such as tanh, which made training considerably faster.

Dropout Regularization: Dropout reduced overfitting by randomly excluding units during training, which prevents co-adaptation of features.

GPU Acceleration: AlexNet was trained on GPUs, which made training a network of its size practical and became standard practice in deep learning.

The Rise of VGGNet

In 2014, the Visual Geometry Group at the University of Oxford presented deeper networks built from small filters. VGGNet stacks 3×3 convolutional layers to a depth of 16 or 19 layers. However, what VGGNet gained in accuracy came at the expense of high computational cost and an enormous number of parameters, which limits its use in real-time applications.

GoogLeNet or Inception Net: From the Basics

GoogLeNet was introduced in 2014 by Christian Szegedy and colleagues at Google in the paper “Going Deeper with Convolutions”. It won the classification task of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2014 with a top-5 error rate of just 6.67%. It outperformed previous models while using far fewer parameters.

The Concept Behind GoogLeNet

The chief aim of GoogLeNet was to advance the state of the art of CNNs by adding depth and width without a matching increase in computational cost. The proposed solution is the Inception module, which builds on the idea of “Network-in-Network.” The concept is that a single layer should be able to detect features at multiple scales, which it does by applying 1×1, 3×3 and 5×5 filters in parallel rather than stacking additional layers.

Key Innovations of the Inception Module

The Inception module was designed to address two main challenges:

Computational Efficiency: Deep networks consume a lot of computational resources, which can make them impractical to deploy.

Effective Feature Extraction: Ordinary convolutional layers use a single fixed filter size, which is not well suited to capturing features at every scale.

The Inception module solves these challenges by:

Using 1×1 Convolutions for Dimensionality Reduction: Before each large filter, a 1×1 convolution reduces the number of input channels, which lowers the computational cost.

Applying Multiple Filters Simultaneously: It runs 1×1, 3×3 and 5×5 convolutions in parallel, which lets the network capture fine details and wider context at the same time.

Adding a Max Pooling Layer: A max pooling branch runs in parallel with the convolutions, which makes the module less sensitive to small spatial shifts.

In the Inception module the same input passes through four parallel branches, and their outputs are concatenated to form the output of the layer, which enables the network to learn multi-scale features efficiently.
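As a rough illustration of the concatenation step, the sketch below tracks only the shapes of the branch outputs, not the actual convolutions. The branch widths are taken from the "inception (3a)" row of the table in "Going Deeper with Convolutions"; the function name `concat_channels` and the `(height, width, channels)` tuple layout are assumptions made for this example.

```python
# Shape-only sketch of the concatenation at the end of an Inception module.
# Names and the (height, width, channels) layout are illustrative assumptions.

def concat_channels(shapes):
    """Concatenate (height, width, channels) shapes along the channel axis."""
    heights = {h for h, _, _ in shapes}
    widths = {w for _, w, _ in shapes}
    if len(heights) != 1 or len(widths) != 1:
        # Concatenation only works if every branch keeps the spatial size.
        raise ValueError("all branches must keep the same spatial size")
    return (heights.pop(), widths.pop(), sum(c for _, _, c in shapes))

# Branch outputs of inception (3a) on a 28x28 input:
branches_3a = [
    (28, 28, 64),   # 1x1 convolution branch
    (28, 28, 128),  # 3x3 convolution branch
    (28, 28, 32),   # 5x5 convolution branch
    (28, 28, 32),   # max pooling branch followed by a 1x1 projection
]
output_3a = concat_channels(branches_3a)
print(output_3a)  # (28, 28, 256)
```

Because every branch uses stride 1 and padding that preserves the spatial size, only the channel count grows when the outputs are stacked.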

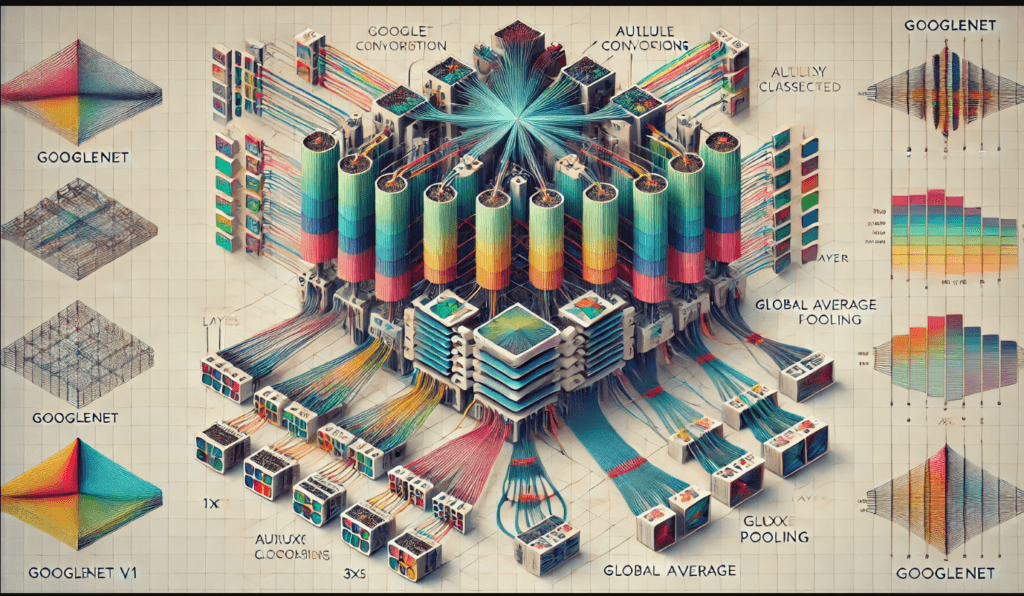

Brief Architecture of GoogLeNet

GoogLeNet is 22 layers deep if only the convolutional and fully connected layers are counted. It has about 7 million parameters, compared with roughly 60 million for AlexNet. The architecture includes:

An initial set of standard convolutional and max pooling layers.

A stack of nine Inception modules.

Two auxiliary classifiers attached to intermediate layers, which counter vanishing gradients and act as regularization during training.

A final global average pooling layer, a fully connected layer and a softmax output layer for classification.
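To make the last item more concrete, here is a minimal pure-Python sketch of global average pooling, together with the weight count it saves in the classifier. The helper name `global_average_pool` and the toy input are hypothetical; the 7×7×1024 size of the final feature map and the 1000 output classes come from the GoogLeNet paper.

```python
# Minimal sketch of global average pooling on nested lists.
# feature_maps is a list of channels, each channel a 2D list of numbers.

def global_average_pool(feature_maps):
    """Average each channel's 2D map down to a single number."""
    pooled = []
    for channel in feature_maps:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled

toy = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0
    [[0.0, 0.0], [0.0, 8.0]],  # channel 1
]
pooled = global_average_pool(toy)
print(pooled)  # [2.5, 2.0]

# Weights of a 1000-way fully connected layer (biases ignored):
flattened_fc_weights = 7 * 7 * 1024 * 1000  # if the 7x7x1024 map were flattened
pooled_fc_weights = 1024 * 1000             # after global average pooling
print(flattened_fc_weights, pooled_fc_weights)  # 50176000 1024000
```

Pooling each channel to a single value before the classifier is one reason the network stays small despite its depth.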

Further Analysis of the Inception Module

The Inception module is the core building block of GoogLeNet. It handles receptive fields of varying sizes within a single layer, so the network learns rich representations while remaining computationally efficient.

Anatomy of the Inception Module

Each Inception module consists of four parallel branches:

1×1 Convolution: Captures pointwise, cross-channel features; 1×1 convolutions are also used for dimensionality reduction ahead of the larger filters.

3×3 Convolution: Captures medium-scale features.

5×5 Convolution: Captures larger-scale patterns.

3×3 Max Pooling: Preserves the strongest local responses and adds tolerance to small spatial shifts.

The outputs of these branches are then concatenated along the channel dimension, forming a feature map that combines the responses of all the filter sizes.

Dimensionality Reduction with 1×1 Convolutions

One of the most effective ideas in GoogLeNet was the use of 1×1 convolutions for dimensionality reduction. The network places a 1×1 convolution before the 3×3 and 5×5 convolutions, which reduces the parameter count, speeds up training and uses less memory. Besides improving efficiency, having fewer parameters can also help limit overfitting.
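The saving can be checked with simple arithmetic. The sketch below counts convolution weights (biases ignored) for the 5×5 branch of the "inception (3a)" module, using the channel sizes from the GoogLeNet paper: 192 input channels, a 1×1 reduction to 16 channels, and 32 output channels. The helper name `conv_weights` is an assumption made for this example.

```python
# Weight count of a 5x5 branch with and without a 1x1 reduction layer.
# Channel sizes follow inception (3a); biases are ignored for simplicity.

def conv_weights(kernel, in_channels, out_channels):
    """Number of weights in a kernel x kernel convolution layer."""
    return kernel * kernel * in_channels * out_channels

# 5x5 convolution applied directly to all 192 input channels
direct = conv_weights(5, 192, 32)

# 1x1 reduction to 16 channels, then the 5x5 convolution
reduced = conv_weights(1, 192, 16) + conv_weights(5, 16, 32)

print(direct)                     # 153600
print(reduced)                    # 15872
print(round(direct / reduced, 2)) # 9.68
```

The number of multiply-accumulate operations scales the same way, since both variants are applied at every spatial position of the same 28×28 feature map.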

Auxiliary Classifiers: Enhancing Gradient Flow

To counter the vanishing gradient problem in deep networks, GoogLeNet attaches auxiliary classifiers to some of its intermediate layers. These auxiliary classifiers are small sub-networks that branch off the main network and provide extra feedback during training. Each consists of an average pooling layer, a 1×1 convolutional layer, fully connected layers and a softmax classifier. They are discarded at inference time, but during training they improve gradient flow, which helps convergence.
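A minimal sketch of how the auxiliary losses enter the training objective is shown below. The weight of 0.3 on the auxiliary losses is the value reported in the GoogLeNet paper; the function names and the toy logits are hypothetical, and a real implementation would compute this inside a deep learning framework rather than in plain Python.

```python
import math

# Sketch of the combined training loss with two auxiliary classifiers.
# Function names and example logits are illustrative assumptions.

def cross_entropy(logits, target):
    """Softmax cross-entropy for one example, using a stable log-sum-exp."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[target]

def googlenet_training_loss(main_logits, aux1_logits, aux2_logits, target,
                            aux_weight=0.3):
    """Main loss plus the down-weighted losses of both auxiliary heads."""
    main = cross_entropy(main_logits, target)
    aux = cross_entropy(aux1_logits, target) + cross_entropy(aux2_logits, target)
    return main + aux_weight * aux

# Toy example with three classes; the correct class is index 0.
loss = googlenet_training_loss(
    main_logits=[2.0, 0.5, -1.0],
    aux1_logits=[1.0, 0.8, 0.1],
    aux2_logits=[1.5, 0.2, -0.3],
    target=0,
)
print(loss)
```

At inference time only the main classifier is evaluated, so the auxiliary terms add no cost to prediction.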

Training GoogLeNet: Strategies and Techniques

Training a network as deep as GoogLeNet involves several choices, including data augmentation, the optimizer and the hyperparameters. The sections below look at some of the methods used to train GoogLeNet efficiently.

Data Augmentation

Data augmentation is one of the most important tools in deep learning because it strongly affects how well a model generalizes. For GoogLeNet, the following techniques were commonly used:

Random Cropping: Cropping random regions from the input images to add variability to the training data.

Flipping and Rotation: Applying random horizontal flips and rotations to increase the diversity of the training set.

Color Jittering: Randomly adjusting brightness, contrast and saturation to make the model more robust to lighting changes.
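The techniques above can be sketched on a tiny grayscale image stored as a nested list. This is only an illustration under simplifying assumptions: the function names are hypothetical, rotation is left out, and color jittering is reduced to a single brightness factor. Real pipelines normally use an image library for these steps.

```python
import random

# Toy augmentation pipeline on a 2D list of pixel intensities (0-255).
# All names here are illustrative, not part of any library.

def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def adjust_brightness(image, factor):
    """Scale pixel values and clamp them to the valid 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]

def augment(image, crop_h, crop_w, rng, flip_prob=0.5):
    """Random crop, optional flip, then a random brightness change."""
    out = random_crop(image, crop_h, crop_w, rng)
    if rng.random() < flip_prob:
        out = horizontal_flip(out)
    return adjust_brightness(out, rng.uniform(0.8, 1.2))

rng = random.Random(0)  # fixed seed so the run is repeatable
image = [[10 * (r * 4 + c) for c in range(4)] for r in range(4)]
patch = augment(image, 3, 3, rng)
print(patch)
```

Each call produces a slightly different view of the same image, which is what gives the network more variety to learn from.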

Optimization Techniques

GoogLeNet is trained with stochastic gradient descent (SGD) with momentum, which accelerates convergence. The learning rate is scheduled to decrease gradually over time, from a higher value to a lower one, so that training settles as it approaches convergence. This schedule also helps the model avoid getting trapped in poor local minima and improves overall performance.
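The update rule and schedule can be sketched in a few lines. The momentum of 0.9 and the 4% decrease every 8 epochs are the values reported in the GoogLeNet paper; the base learning rate of 0.1, the toy objective f(w) = w² and the function names are assumptions chosen only to make the example runnable.

```python
# Sketch of SGD with momentum and a step-decay learning rate schedule.
# The base learning rate and the toy objective are illustrative assumptions.

def step_decay_lr(base_lr, epoch, decay=0.96, every=8):
    """Multiply the learning rate by `decay` once every `every` epochs."""
    return base_lr * decay ** (epoch // every)

def sgd_momentum_step(weights, grads, velocity, lr, momentum=0.9):
    """One update: velocity accumulates past gradients, weights follow it."""
    new_velocity = [momentum * v - lr * g for v, g in zip(velocity, grads)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity

# Minimise f(w) = w^2, whose gradient is 2w, starting from w = 1.
w, v = [1.0], [0.0]
for epoch in range(200):
    lr = step_decay_lr(0.1, epoch)
    grads = [2.0 * x for x in w]
    w, v = sgd_momentum_step(w, grads, v, lr)

print(w[0])  # close to the minimum at 0
```

In a real training run there would be many updates per epoch, but the schedule still changes the learning rate only at epoch boundaries.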

Regularization Methods

To prevent overfitting, GoogLeNet employs several regularization techniques:

Dropout: A dropout layer before the fully connected layer randomly zeroes activations during training to reduce overfitting.

Weight Decay: An extra term is added to the cost function that penalizes large weights, which favors simpler models.

Label Smoothing: Softens the target labels so that the model is less overconfident in its predictions and generalizes better. This technique was introduced with the later Inception-v3 rather than the original GoogLeNet.
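The three regularizers can each be written as a short function. The sketch below is illustrative only: the function names are hypothetical, the dropout shown is the common "inverted" variant that rescales the kept activations, and the smoothing value of 0.1 is the one used in the Inception-v3 paper.

```python
import random

# Pure-Python sketches of dropout, weight decay and label smoothing.
# Names and default values are illustrative assumptions.

def dropout(activations, drop_prob, rng):
    """Inverted dropout: zero some units, rescale the rest by 1 / keep."""
    keep = 1.0 - drop_prob
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, weight_decay):
    """Extra loss term that grows with the squared size of the weights."""
    return 0.5 * weight_decay * sum(w * w for w in weights)

def smooth_labels(target, num_classes, epsilon=0.1):
    """Move a share epsilon of the one-hot target evenly onto all classes."""
    uniform = epsilon / num_classes
    return [uniform + (1.0 - epsilon if k == target else 0.0)
            for k in range(num_classes)]

rng = random.Random(0)
dropped = dropout([1.0] * 10, drop_prob=0.4, rng=rng)
penalty = l2_penalty([3.0, 4.0], weight_decay=0.01)
smoothed = smooth_labels(target=1, num_classes=4)

print(dropped)
print(penalty)   # about 0.125
print(smoothed)  # about [0.025, 0.925, 0.025, 0.025]
```

Dropout is applied only during training; at inference time the activations pass through unchanged.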

Real-life Applications of GoogLeNet

GoogLeNet combines high accuracy with a small parameter count, which makes it usable in a broad range of applications. The sections below look at some of the areas where it has been most useful.

Image Classification and Recognition

Its accuracy in the ImageNet competition established GoogLeNet as a leading model for image classification tasks. It has been widely used in various industries for applications such as:

Medical Imaging: Identifying diseases by interpreting X-rays, MRIs and other diagnostic images.

Autonomous Vehicles: Real-time recognition and detection of objects and pedestrians on the road to improve vehicle safety.

Retail and E-commerce: Improving search and recommendation within a product catalogue by analyzing product images.

Object Localization

Although GoogLeNet was designed primarily for classification, it has been extended to object detection by serving as the feature extractor in detectors that use techniques such as Region Proposal Networks (RPNs). This has applications in:

Surveillance Systems: Identifying and tracking objects in security camera footage.

Agriculture: Monitoring crop health and pests with drones.

Transfer Learning

GoogLeNet is also well suited to transfer learning, where a model trained on one dataset is fine-tuned on another. This approach is widely used in industries where labelled data is scarce, because it lets models reuse what was learned from large datasets such as ImageNet.

GoogLeNet’s Legacy and Future Trends

The success of GoogLeNet led researchers to design improved models in the Inception series, including Inception-v3, Inception-v4 and Inception-ResNet. These variants extend the basic architecture with features such as batch normalization, residual connections and factorized convolutions to improve accuracy further.

The Evolution of the Inception Family

The Inception family is still widely used because of its balance between accuracy and computational cost. Some notable advancements include:

Inception-v3: Introduced in 2015, it refines the Inception module by factorizing larger convolutions into smaller ones and by using batch normalization.

Inception-v4: A deeper design that aims to add depth with little or no compromise in computational cost.

Inception-ResNet: Combines Inception modules with residual connections to address poor gradient flow in very deep architectures.
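The benefit of factorizing a larger convolution can be checked with a short calculation. The sketch below compares one 5×5 convolution with two stacked 3×3 convolutions, which cover the same 5×5 receptive field. The channel count of 64 is an arbitrary assumption, the function names are hypothetical, and biases are ignored.

```python
# Weight count and receptive field of a 5x5 convolution versus two 3x3 layers.
# The channel count is an arbitrary value chosen for illustration.

def conv2d_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Number of weights in a convolution layer (biases ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels

def stacked_receptive_field(kernel_sizes):
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field

channels = 64
single_5x5 = conv2d_weights(5, 5, channels, channels)
two_3x3 = 2 * conv2d_weights(3, 3, channels, channels)
saving = 1 - two_3x3 / single_5x5

print(stacked_receptive_field([3, 3]))  # 5
print(single_5x5)                       # 102400
print(two_3x3)                          # 73728
print(round(saving, 2))                 # 0.28
```

The stacked version sees the same 5×5 neighbourhood with about 28% fewer weights and adds an extra nonlinearity between the two layers.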

The Future of CNNs

GoogLeNet’s victory set the stage for even deeper architectures such as ResNet, DenseNet and EfficientNet, which overcome limitations of conventional CNNs (Srivastava et al., 2015). Looking ahead, the main effort is directed towards adapting models to edge devices, reducing latency and improving model interpretability.

Conclusion

The Inception architecture introduced by GoogLeNet marked the start of a new generation of deep learning networks that deliver state-of-the-art performance at reasonable computational cost. By capturing multi-scale features and using parameters efficiently, this approach has become a standard in computer vision. The ideas behind GoogLeNet are now widely applied in areas such as medical imaging and self-driving cars, and they are regarded as a fundamental breakthrough in deep learning.

GoogLeNet, also known as Inception Net, is one of the major advances in deep learning and computer vision. First proposed by researchers at Google in 2014, it improved both the accuracy and the speed of image classification.

To achieve better accuracy, the GoogLeNet architecture uses the Inception module to apply convolution filters of several sizes in parallel, which lets the network capture both the fine detail and the general context of an image. This multi-scale approach allowed the network to be deep and efficient at the same time, without incurring the high computational cost that is characteristic of other deep networks such as VGGNet.

One of the principal accomplishments of GoogLeNet was achieving the best ILSVRC performance with fewer parameters than its predecessors. With techniques such as 1×1 convolutions for dimensionality reduction, auxiliary classifiers to counter vanishing gradients, and global average pooling to limit overfitting, GoogLeNet became a model of choice for many applications.

Unlike many computer vision algorithms that remain research findings and are used only in that context, GoogLeNet has been widely applied to object recognition in other fields, including medical imaging in healthcare and self-driving cars in the automotive industry. Later versions of the model, such as Inception-v3 and Inception-ResNet, developed the architecture further.

Consequently, GoogLeNet was a turning point in CNN design, shifting the focus from a trade-off between depth and accuracy to one between depth and efficiency. It remains an important architecture that forms the basis for many modern neural networks, and it showed that inventive architectural solutions can yield exceptional results both in theory and in practice. As deep learning continues to advance, GoogLeNet’s legacy remains strong and continues to inform new solutions to visual recognition challenges.
