ResNet Architecture and Skip Connections: Revolutionizing Deep Learning

For years, convolutional neural networks (CNNs) have been a cornerstone of challenging visual recognition problems such as image classification, object detection, and segmentation. But as networks grew deeper, they ran into new issues. One of the most important was the vanishing gradient problem: learning becomes harder and harder as the depth of the network increases.

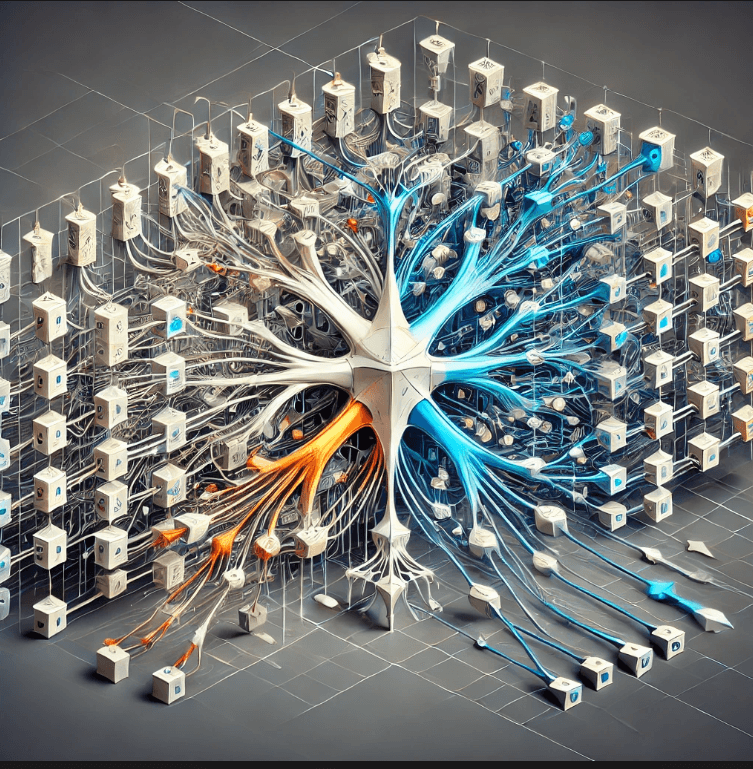

Enter ResNet, short for Residual Network, a revolutionary deep learning architecture. ResNet introduced skip connections, also called residual connections, which make it possible to train very deep networks without the gradients fading away. This advance not only improved performance but also paved the way for many later developments in deep learning.

In this article, we will look closely at the ResNet architecture, explain the key idea of skip connections, and discuss the impact ResNet has had on CNNs and deep learning in general.

Deep Networks and the Challenge

Before discussing ResNet in detail, it is worth looking at the problems deep neural networks faced before it appeared.

As networks gained more layers, the gradients computed during backpropagation for the weight updates shrank on their way back to the early layers. This is the vanishing gradient problem, and it made it very hard for the networks to learn from the data. In other words, the deeper the network, the more difficult it is to make the right changes to the weights for the model to perform well.

In the case of CNNs, researchers found that as networks became deeper, their ability to learn was hampered. Deeper networks are expected to perform better because they can capture higher-order features, but they were also much harder to train efficiently.

The breakthrough of ResNet was the introduction of skip connections, a concept that allowed the gradient to flow more effectively through the network, even in very deep models. By bypassing one or more layers, skip connections ensured that the network would still be able to learn even as its depth increased.

What is ResNet?

The ResNet architecture attracted a great deal of attention when it won ILSVRC 2015 (the ImageNet Large Scale Visual Recognition Challenge) with very high accuracy. It did so while training extremely deep networks, up to 152 layers, which earlier models had not managed because of the vanishing gradient problem.

The Core Idea: Skip Connections

The major innovation of ResNet is the skip connection, or residual connection. These connections let the network learn residual functions with respect to the layer inputs instead of learning an unreferenced mapping directly. That is, rather than producing the desired output from scratch, a block learns the difference between its input and the desired output, the residual. The idea rests on the hypothesis that a residual mapping is easier for a deep network to optimize than a direct mapping. It also makes deeper layers easier to train, because each one only has to learn a correction to what the previous layers have already produced.

How Skip Connections Work

A common skip connection is the identity connection, a shortcut that links a layer's input directly to its output without passing through the layers in between. Formally, for a given layer $l$:

$$y_l = F(x_l, \{W_l\}) + x_l$$

Where:

$x_l$ is the input to the layer.

$F(x_l, \{W_l\})$ is the operation the layer performs on the input using its weights $W_l$; it may involve convolution, activation, or pooling.

$y_l$ is the output of the layer.

The residual mapping is formed by adding the input $x_l$ to the transformed output $F(x_l, \{W_l\})$.

This addition is what makes the skip connection work: the model can retain deep features without them degrading as the depth of the network increases.
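To make the formula concrete, here is a minimal sketch in plain Python. The names `residual_layer` and `make_scale_layer` and the toy transformation are illustrative assumptions, not part of ResNet itself; a real layer would use convolutions rather than a single scalar weight.

```python
from typing import Callable, List

Vector = List[float]


def residual_layer(x: Vector, transform: Callable[[Vector], Vector]) -> Vector:
    """Compute y = F(x) + x for a transform F that preserves the input length."""
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must have the same shape as x for an identity shortcut")
    # Element-wise addition of the transformed output and the untouched input.
    return [f + xi for f, xi in zip(fx, x)]


def make_scale_layer(weight: float) -> Callable[[Vector], Vector]:
    """Toy stand-in for F(x, {W}): multiplies every element by one weight."""
    return lambda x: [weight * xi for xi in x]


x = [1.0, -2.0, 3.0]
y_zero = residual_layer(x, make_scale_layer(0.0))  # F contributes nothing
y_half = residual_layer(x, make_scale_layer(0.5))  # F adds half of x on top
```

With the weight at zero, the layer returns its input unchanged, which is the identity mapping that the shortcut provides for free.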

Types of ResNet Architectures

ResNet comes in several variants, named after their number of layers. The most common ones are:

ResNet-18: The shallowest common variant, with 18 layers, suited to less complex tasks.

ResNet-34: A 34-layer version that is still relatively shallow and is commonly used for ordinary tasks.

ResNet-50: A more advanced 50-layer version. It uses bottleneck layers and is perhaps the most widely used variant in real-world applications.

ResNet-101: A 101-layer version suited to larger amounts of data than the shallower variants.

ResNet-152: The deepest of these variants, with 152 layers, for highly demanding tasks that require high accuracy.

Deeper ResNet variants have a higher computational cost, yet the architecture has achieved very high accuracy in a number of applications, especially image classification and object recognition.
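The layer counts in these names can be reproduced with a little arithmetic. The sketch below assumes the four-stage block layout commonly published for the ImageNet versions of these models, and it counts only the first convolution, the convolutions inside the blocks, and the final fully connected layer. The dictionary and function names are made up for illustration.

```python
# (block type, number of blocks in each of the four stages)
RESNET_CONFIGS = {
    "ResNet-18": ("basic", [2, 2, 2, 2]),
    "ResNet-34": ("basic", [3, 4, 6, 3]),
    "ResNet-50": ("bottleneck", [3, 4, 6, 3]),
    "ResNet-101": ("bottleneck", [3, 4, 23, 3]),
    "ResNet-152": ("bottleneck", [3, 8, 36, 3]),
}

# Convolutions per block: basic = two 3x3; bottleneck = 1x1, 3x3, 1x1.
CONVS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def count_weighted_layers(block_type: str, blocks_per_stage: list) -> int:
    """Count the stem convolution, all block convolutions, and the classifier."""
    stem_conv = 1
    classifier = 1
    return stem_conv + CONVS_PER_BLOCK[block_type] * sum(blocks_per_stage) + classifier


layer_counts = {
    name: count_weighted_layers(block_type, stages)
    for name, (block_type, stages) in RESNET_CONFIGS.items()
}
```

Under these assumptions, each count matches the number in the variant's name. Convolutions on the shortcut path are left out of the tally, following the usual naming convention.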

The Anatomy of ResNet

Convolutional Blocks

At its base, ResNet is built from ordinary convolutional layers, just like a standard CNN. These layers convolve the image to extract its features.

The difference lies in how these convolutional layers are connected. Instead of simply stacking non-linear operations, ResNet adds each block's input to its output, which lets the model learn residual mappings.

Bottleneck Architecture

In deeper versions of ResNet (such as ResNet-50, ResNet-101, and ResNet-152), a bottleneck design is used to reduce computational complexity and improve training efficiency. A bottleneck block consists of three layers:

1×1 convolution: Reduces the number of feature channels of the input.

3×3 convolution: Performs the main feature extraction.

1×1 convolution: Restores the number of channels so that the output dimensions match the input.

This design lets ResNet scale to very deep networks with comparatively few parameters and a lower computational cost.
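A quick weight count shows where the savings come from. This is a rough sketch that ignores biases and normalization layers. The channel widths (256 reduced to 64) are assumed for illustration, and the helper names are hypothetical.

```python
def conv_weights(kernel: int, in_channels: int, out_channels: int) -> int:
    """Number of weights in a kernel x kernel convolution, ignoring biases."""
    return kernel * kernel * in_channels * out_channels


def bottleneck_weights(channels: int, reduced: int) -> int:
    """1x1 reduce -> 3x3 at the reduced width -> 1x1 restore."""
    return (
        conv_weights(1, channels, reduced)
        + conv_weights(3, reduced, reduced)
        + conv_weights(1, reduced, channels)
    )


def plain_pair_weights(channels: int) -> int:
    """Two 3x3 convolutions that both operate at the full channel width."""
    return 2 * conv_weights(3, channels, channels)


bottleneck = bottleneck_weights(channels=256, reduced=64)  # 69,632 weights
plain_pair = plain_pair_weights(channels=256)              # 1,179,648 weights
```

For these assumed widths, the three-layer bottleneck needs roughly 17 times fewer weights than two full-width 3×3 convolutions, because the expensive 3×3 convolution only sees the reduced channels.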

Identity Mapping and Downsampling

However, in deeper network designs the input and output of a block often differ in their dimensions, so a downsampling operation is applied to make the dimensions match before the addition is performed. This is usually done with a convolutional layer with a stride of 2, which reduces the spatial dimensions of the feature map.
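The shape bookkeeping can be checked with the standard convolution output-size formula. The sketch below assumes a 1×1 stride-2 convolution on the shortcut, which is a common choice although the text above does not fix the kernel size. It also assumes a 56×56 feature map and 128 output channels. All names are illustrative.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def downsampling_block_shapes(size: int, out_channels: int):
    """Return (main_path_shape, shortcut_shape) as (channels, height, width)."""
    # Main path: a 3x3 convolution with stride 2 halves the feature map,
    # then a 3x3 convolution with stride 1 keeps that size.
    main = conv_output_size(size, kernel=3, stride=2, padding=1)
    main = conv_output_size(main, kernel=3, stride=1, padding=1)
    # Shortcut: a 1x1 convolution with stride 2 produces the same size,
    # and its output channel count is set to match the main path.
    shortcut = conv_output_size(size, kernel=1, stride=2, padding=0)
    return (out_channels, main, main), (out_channels, shortcut, shortcut)


main_shape, shortcut_shape = downsampling_block_shapes(size=56, out_channels=128)
shapes_match = main_shape == shortcut_shape
```

Both paths end at 128 channels on a 28×28 map, so the element-wise addition is well defined.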

Advantages of ResNet

Solving the Vanishing Gradient Problem

The first benefit of ResNet's skip connections is that they counter the vanishing gradient problem. Because gradients can flow directly through the skip connections, backpropagation stays stable even in very deep networks. This makes it possible to train networks that are several times deeper than earlier architectures.

Enhanced Performance

Deep networks often perform well because they capture more subtle features and representations. Instead of losing accuracy as depth grows, a ResNet with skip connections (also called shortcut connections) can be trained deeper, which makes it well suited to higher-level vision problems such as image classification, object detection, and facial recognition.

Efficient Training

Skip connections benefit every layer, so during training the model converges more easily than one with standard connections only. As a result, ResNets train faster and need fewer iterations to reach their best result than plain deep networks.

Generalization

Thanks to residual learning, the networks tend to generalize better to unseen data. Learning residuals reduces the risk that the model overfits the training data.

Applications of ResNet

The innovations brought by ResNet have been applied across many areas of deep learning, especially those that rely on visual recognition.

Image Classification

ResNet set new records in image classification. Thanks to their depth, ResNet models achieved higher accuracy than earlier models on public datasets such as ImageNet and CIFAR-10, where the task is to assign each image to a category.

Object Detection and Segmentation

Because of its high accuracy in image classification, ResNet has also been extended to object detection and segmentation. In these tasks, ResNet's ability to extract fine details makes it useful for identifying objects in images and then segmenting them.

Medical Imaging

In medicine, ResNet has been applied to use cases such as diagnosing diseases from scans, whether CT scans or X-rays. Its deep architecture is well suited to the intricate, subtle patterns found in medical images, which helps in developing better diagnostic tools and techniques.

Autonomous Driving

ResNet-based models are also used in autonomous driving, where identifying pedestrians, other vehicles, signs, and lanes on the road is essential for the safe operation of a car.

ResNet Architecture and its Features

To fully grasp the importance of ResNet, it is also important to understand the vanishing gradient issue in standard neural networks. As a network gets deeper, the gradients used in backpropagation, the process that trains the weights, can shrink exponentially. This happens because gradients are passed backward layer by layer, and by the time they reach the early layers they are too small to produce meaningful weight changes.

In a very deep network, this means that the weights in the early layers are barely updated during training. The model's performance may degrade or, at worst, it may stop learning altogether. ResNet addresses this problem with residual learning: gradients can pass through the skip connections rather than through every layer, which reduces the vanishing gradient effect.
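The effect on gradients can be written down directly. As a sketch, assume a single residual block with output $y = F(x) + x$ and a training loss $\mathcal{L}$ (a symbol introduced here only for illustration). The chain rule gives:

$$\frac{\partial \mathcal{L}}{\partial x} = \frac{\partial \mathcal{L}}{\partial y}\left(\frac{\partial F(x)}{\partial x} + 1\right)$$

The constant $1$ comes from the shortcut, so part of the gradient reaches $x$ unscaled even when $\partial F(x) / \partial x$ is close to zero.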

Residual Learning and Identity Mapping

One of the fundamental ideas in ResNet is residual learning. In a traditional neural network, a block of layers tries to learn the direct mapping from input $x$ to output $y$. In ResNet, the block instead learns a residual function $F(x) = y - x$, so that:

$$y = F(x) + x$$

Where $F(x)$ stands for the learned residual mapping of the input. In this way the model learns the residual, or delta, that it needs in order to produce the right output from the input. If the residual is zero, the output equals the input, which is an identity mapping of the kind shown in the figure above.

This works because the network can refine its output rather than learn the entire transformation from scratch. In practice, it means ResNet scales better with depth, since every additional layer learns only a residual transformation instead of a complete one.

Building ResNet Blocks

The ResNet architecture is built from residual blocks. A simple residual block has two or more convolutional layers and a shortcut connection that adds the input to the output. The result of this addition is then passed through an activation function, usually ReLU, and the final output is fed to the next block.

Block structure:

Convolution Layer: Applies filters to the input to extract features.

ReLU Activation: Introduces non-linearity into the model, allowing it to capture more complex patterns than it otherwise could.

Skip Connection: This looks simple, but it adds the input signal directly to the output without passing it through the convolutions.
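The order of operations in such a block can be sketched with a one-dimensional toy version in plain Python. This is an illustration under simplifying assumptions: a single channel, odd-length kernels, and no batch normalization (which real ResNet blocks place after each convolution). The function names are hypothetical.

```python
from typing import List

Signal = List[float]


def conv1d_same(x: Signal, kernel: Signal) -> Signal:
    """Slide an odd-length kernel over x with zero padding; output length equals input length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(x))
    ]


def relu(x: Signal) -> Signal:
    """Replace negative values with zero."""
    return [max(0.0, v) for v in x]


def basic_residual_block(x: Signal, kernel1: Signal, kernel2: Signal) -> Signal:
    """conv -> ReLU -> conv, add the input back, then a final ReLU."""
    out = relu(conv1d_same(x, kernel1))
    out = conv1d_same(out, kernel2)
    out = [o + xi for o, xi in zip(out, x)]  # skip connection
    return relu(out)


x = [1.0, 2.0, 3.0]
identity_like = basic_residual_block(x, [0.0, 0.0, 0.0], [0.0, 0.0, 0.0])
smoothed = basic_residual_block(x, [0.0, 1.0, 0.0], [1.0, 1.0, 1.0])
```

With all-zero kernels the block passes its non-negative input through unchanged. With non-zero kernels the convolutions add a learned correction on top of the input.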

In ResNet-50, ResNet-152, and other deeper networks, the stacked blocks use intermediate bottleneck layers for efficiency and performance. The bottleneck design reduces the number of parameters and operations by placing a 3×3 convolution between two 1×1 convolutional layers that shrink and then restore the channel dimension.

Bottleneck Blocks and Their Function

In brief, the bottleneck design, which directly reduces computational complexity, is applied to the deeper ResNet models such as ResNet-50, 101, and 152.

A bottleneck block is a three-layer structure that operates as follows:

1×1 Convolution: Reduces the number of feature channels, which amounts to dimensionality reduction.

3×3 Convolution: Performs the main transformation of the input.

1×1 Convolution: Restores the feature channels to their original number.

This design also reduces the number of parameters, which leads to faster training and lower computational complexity without a loss in performance. Even though the dimensionality is reduced by the first 1×1 convolution, the block retains the residual network's capacity for learning.

Skip Connections: More Than Just Shallow Layers

Skip connections are among ResNet's most influential features because they help the network learn quickly even at its greatest depths. Skip connections can also help reduce overfitting by carrying features from earlier layers through the network without sending them through every convolutional block. They also act as a form of regularization, since the network does not have to rely solely on the features learned at each depth.

Shortening the Path for Gradients

In very deep networks, training becomes difficult because gradients have to pass through many layers, causing them to diminish or “vanish” by the time they reach the earlier layers. With skip connections, the gradients can flow directly through the shortcut paths, bypassing several layers, which helps maintain their strength and stability. This results in more stable and faster convergence during training, allowing the network to learn more effectively, even with hundreds or even thousands of layers.
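A scalar toy model illustrates the difference over many layers. In a plain stack, the gradient at the input is the product of each layer's local derivative. In a residual stack with blocks of the form y = F(x) + x, each factor becomes that derivative plus one. The depth of 50 and the local derivative of 0.01 are assumed values chosen only to make the contrast visible, and the function names are illustrative.

```python
def gradient_through_plain(local_grads):
    """Product of the local derivatives dF/dx of every layer in a plain stack."""
    grad = 1.0
    for g in local_grads:
        grad *= g
    return grad


def gradient_through_residual(local_grads):
    """With y = F(x) + x, each block contributes a factor of (dF/dx + 1)."""
    grad = 1.0
    for g in local_grads:
        grad *= g + 1.0
    return grad


depth = 50
local_grads = [0.01] * depth  # assumed derivative of F in every layer

plain = gradient_through_plain(local_grads)        # vanishingly small
residual = gradient_through_residual(local_grads)  # stays on the order of 1
```

In this toy setting the plain gradient collapses toward zero, while the residual gradient stays at a usable scale. Real networks involve matrices and non-linearities, so this is only an intuition for why the shortcut path helps.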

Improving the Optimization Process

Since skip connections help gradients flow more efficiently, they also make the optimization process easier. The network can learn to adjust its parameters without worrying about the vanishing gradient problem, leading to faster convergence and the ability to train much deeper models. This has proven to be particularly useful when working with large datasets, as deeper models tend to capture more intricate patterns and generalize better to new data.

Conclusion

ResNet marked an important step in the evolution of deep neural networks, thanks to its novel structure based on skip connections. These connections addressed the problems of extremely deep networks and enabled them to train effectively and achieve high performance on many tasks.

By making much deeper networks trainable, ResNet not only pushed the state of the art in image classification and computer vision but also shaped the architectures that followed (e.g., DenseNet and other deep convolutional neural networks). ResNet remains one of the most widely used deep learning architectures today and has inspired the next generation of AI and computer vision applications.

ResNet brought a paradigm change to neural network design, and its effects will continue to be felt in the design of next-generation models for years to come. With its deep architecture and powerful skip connections, ResNet has been at the heart of the ongoing evolution of artificial intelligence, from image classification to medical diagnosis and autonomous driving.
