Exploring LeNet Architecture: The Pioneer of Convolutional Neural Networks in Machine Learning

Machine learning has made tremendous progress over the years, and one of its most significant developments is the Convolutional Neural Network (CNN). One of the earliest and most influential architectures in this area is LeNet, a pioneering model introduced in 1998 by Yann LeCun and his collaborators. LeNet not only laid the groundwork for today’s CNNs but also changed how machines process visual information. This article describes LeNet in depth, covering the architecture itself, its practical uses, and its historical background.

The Birth and Importance of LeNet in the History of Machine Learning

LeNet emerged from the need to automate processes that depended heavily on image recognition, such as handwriting recognition for post offices. Its construction was a breakthrough in artificial intelligence, as it was one of the very few deep learning architectures of its time that could generalize to real-world data. The architecture was novel in offering a structured approach to complex pattern recognition, using layers loosely modeled on the way the human visual system works.

The architecture’s introduction coincided with a rise in neural network research, when researchers sought ways to overcome the limitations of traditional algorithms. In contrast to previous models, which relied on manual feature extraction, LeNet learned its features directly from the data. This capacity to learn and adapt to varying datasets makes it a turning point in machine learning.

Understanding the LeNet Architecture

LeNet combines convolutional, pooling, and fully connected layers, stacked so that each stage processes the visual information passed on by the one before it. Although the architecture is simple by contemporary standards, it introduced concepts that remain cornerstones of CNN design.

LeNet starts with an input layer that accepts fixed-size grayscale images. The first convolutional layer applies a set of learnable filters to the input image, producing feature maps that capture salient patterns such as edges and textures. This layer is followed by a pooling layer that downsamples the spatial dimensions of the feature maps, improving computational efficiency and helping to prevent overfitting.
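To make the idea of a filter producing a feature map concrete, here is a minimal pure-Python sketch of a stride-1 convolution without padding. The tiny 4×4 image and the hand-picked edge filter are illustrative assumptions; a trained network learns its own filter weights.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of lists) with stride 1 and no padding.

    As in most deep learning code, this is technically a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge filter, used here only for illustration.
vertical_edge = [[-1, 1],
                 [-1, 1]]

feature_map = conv2d_valid(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map responds only in the column where the dark and bright halves meet, which is the sense in which a filter "detects" an edge.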

The network then moves to a second convolutional layer, which refines the previously extracted features and detects new, more complex patterns. Another pooling layer follows to downsample the information further while preserving its most important content. The remaining layers of LeNet are fully connected, and their output is the probability of each class given the learned features. These probabilities determine the model’s prediction, such as which handwritten digit an image contains.

The structure of LeNet is one of its most intriguing aspects. Stacked convolution and pooling operations allow the network to build progressively more abstract representations at each layer. This hierarchical learning process is analogous to the visual cortex of the human brain, where simple patterns (lines or shapes) are recognized first and more complex features afterwards.

Why LeNet Was Revolutionary

Before the advent of LeNet, most image recognition tasks relied on manually crafted features and traditional machine learning algorithms. These approaches were typically slow and error-prone, because they could not select the necessary features automatically and depended on domain knowledge for feature engineering. LeNet broke with this paradigm by introducing an end-to-end learning architecture, in which the network itself learns the most appropriate features for a given task.

Its handling of spatial hierarchies in data was another distinctive aspect of LeNet. By employing convolutional and pooling layers, the model can focus on local information in an image while preserving global information. This spatial awareness let LeNet generalize better than conventional models on handwritten digit recognition, where the spatial arrangement of the pixels matters.

In addition, the simplicity of LeNet made it readily adaptable to a wide class of applications beyond digit recognition. Its architecture served as a foundation for subsequent CNNs that now underpin modern computer vision applications ranging from facial recognition to object detection.

Applications of LeNet in Real-World Scenarios

The most immediate use of LeNet has been optical character recognition (OCR) focused on the processing of handwritten characters for postal services and banks. Automated recognition of handwritten numerals has greatly reduced the time and labor required for manual data entry, and has made it possible for processes to be carried out much faster and more effectively.

Besides OCR, LeNet has also been used for some image classification tasks. Its capacity to generalize to different datasets made it a candidate for applications ranging from facial recognition and medical image analysis to preliminary experiments in autonomous mobility. Later, more powerful models have since taken over most of this ground, but the contributions of LeNet remain clearly visible in these domains.

From a teaching point of view, LeNet has long been an influential model for both learning and academic research. Thanks to its modest size and ease of interpretation, it is an effective entry point for students and researchers learning the basics of deep learning and CNNs. Many recent developments in computer vision build on lessons learned from LeNet.

The Technical Innovations Behind LeNet

LeNet’s success has been attributed to a number of technical advances that set it apart from previous neural network models. One key innovation is the use of convolutional layers, which apply local filters to the input. This approach not only reduces the number of trainable parameters but also retains spatial information in the data, which makes it highly suited to image processing tasks.
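The parameter saving can be checked with simple arithmetic. The sketch below compares LeNet-5’s first convolutional layer (six 5×5 filters on a 32×32 single-channel input, the sizes given in the layer-by-layer description later in this article) with a hypothetical fully connected layer producing the same 28×28×6 output. The helper names are illustrative only.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per output feature map; the filter is shared across positions."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def dense_params(n_inputs, n_outputs):
    """Weights plus one bias per output unit; nothing is shared."""
    return n_outputs * (n_inputs + 1)


conv_count = conv_params(in_channels=1, out_channels=6, kernel_size=5)
dense_count = dense_params(n_inputs=32 * 32, n_outputs=28 * 28 * 6)

print("convolutional layer:", conv_count)   # 156
print("dense equivalent:   ", dense_count)  # 4821600
```

Because every position in the image reuses the same small filter, the convolutional layer needs a few hundred parameters where the dense layer would need several million.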

Pooling layers are an integral part of LeNet. They downsample the feature maps while preserving the most informative content. This dimensionality reduction lowers the risk of overfitting and makes the model more computationally efficient. The combination of convolutional and pooling layers is what allows LeNet to capture and process complex patterns in a hierarchical fashion.
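As a rough illustration of the downsampling step, the following sketch applies average pooling with a 2×2 window and a stride of 2, the settings given for LeNet-5’s pooling layers later in this article. The 4×4 input values are made up for the example.

```python
def avg_pool2d(feature_map, size=2, stride=2):
    """Replace each size x size window with its mean, moving `stride` cells at a time."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            sum(feature_map[r * stride + i][c * stride + j]
                for i in range(size) for j in range(size)) / (size * size)
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Made-up 4x4 feature map.
fm = [[1, 3, 2, 0],
      [5, 7, 4, 2],
      [0, 0, 8, 8],
      [4, 4, 8, 8]]

pooled = avg_pool2d(fm)
print(pooled)  # [[4.0, 2.0], [2.0, 8.0]]
```

Each output value summarizes four input values, so the spatial size halves in both directions while the coarse pattern is kept.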

Another notable feature of LeNet is its use of activation functions to introduce non-linearity into the model. By applying a non-linear transformation to the data, the network can capture more complex relationships between the input features and the output classes. Activation functions such as ReLU, sigmoid, and tanh are now used routinely in recent architectures.
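For reference, the three activation functions named above can be written in a few lines. This is a minimal sketch using only the standard library; the sample inputs are arbitrary.

```python
import math


def sigmoid(x):
    """Squashes any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squashes any real input into the range (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Passes positive inputs through unchanged and clips negatives to zero."""
    return max(0.0, x)


for value in (-2.0, 0.0, 2.0):
    print(value, round(sigmoid(value), 4), round(tanh(value), 4), relu(value))
```

Sigmoid and tanh saturate for large positive or negative inputs, while ReLU stays linear for positive inputs.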

Challenges and Limitations of LeNet

The main limitation was that the system worked on grayscale images of a fixed size. This restricted its application to datasets with more heterogeneous inputs, such as color images or images of varying size.

Another limitation was the computational resources required for training. Although LeNet was less computationally demanding than some alternatives, it was still not cheap to train. This kept it out of reach of many researchers and organisations that lacked access to the necessary hardware.

Additionally, because of LeNet’s relatively shallow depth, it could not capture more abstract representations in the data. While it excelled at tasks like digit recognition, it struggled with more challenging applications, such as object detection in cluttered scenes. These limitations eventually led to the development of more sophisticated and deeper architectures.

The Enduring Legacy of LeNet

The effect of LeNet on machine learning is undeniable. It contributed concepts that are the basis of today’s CNNs and inspired designs such as AlexNet, VGG, and ResNet. The convolutional, pooling, and hierarchical learning concepts developed in LeNet still form the foundation of current state-of-the-art systems.

In addition to its technical advances, LeNet illustrated the potential of deep learning for real-world applications. Its success in automating the recognition of handwritten digits sparked a new wave of machine learning innovation, which in turn led to research on new applications and architectures.

Today, LeNet stands as a milestone in AI history, and also as an anchor and a source of inspiration for researchers and practitioners alike. Its legacy lies not only in the innovations it sparked but also in what it revealed about the power of neural networks.

Expanding on LeNet: Exploring its Depth and Ongoing Influence in Machine Learning

The story of LeNet is a story of foundational machine learning, and it deserves further exploration so that its full significance can be appreciated. The first part of this article introduced LeNet’s architecture, uses, and history; there is more to say about its technical details, its impact on current models, and its relevance to today’s evolving AI landscape. This deeper look highlights LeNet’s continuing importance and shows how its ideas apply to present-day problems.

A Deeper Look at LeNet’s Core Architecture

LeNet’s structural minimalism was state of the art at the time, yet careful design decisions lie behind its deceptively simple structure. For instance, the input layer was designed to accept 32×32 grayscale images, slightly larger than the 28×28 digits of the MNIST dataset. This margin allowed the network to preserve relevant spatial information near the image borders during the convolution operations.

The first convolutional layer, with 6 learnable filters, extracts basic features such as edges and textures. The resulting feature maps are then subsampled by the following pooling layer, which downsamples them while retaining the useful patterns. This brings not only a gain in computational efficiency but also invariance to small translational shifts in the input, a desirable property for tasks such as digit recognition.

The second convolutional layer has 16 filters, and the representation of the input becomes progressively more complex. With more filters, the layer learns increasingly complex patterns, such as curves or specific shapes. A final pooling layer then downsamples the features further, so that only the most salient ones are passed to the fully connected layers.

Finally, the downsampled feature maps are flattened into a 1D vector and passed through the fully connected layers. This representation is fed into the output layer, where a softmax produces a probability for each class. The modularity of this design lets it adapt to other classification problems, provided the input size and the number of output units are scaled appropriately.
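The flattening and softmax steps described above can be sketched as follows. The feature-map values and the ten class scores are placeholders chosen for the example, not outputs of a trained LeNet.

```python
import math


def flatten(feature_maps):
    """Turn a list of 2D feature maps into a single 1D list of values."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Placeholder input: 16 feature maps of size 5x5, as after the last pooling layer.
maps = [[[0.0] * 5 for _ in range(5)] for _ in range(16)]
vector = flatten(maps)
print(len(vector))  # 400

# Placeholder scores for the ten digit classes.
scores = [0.1, 0.3, 2.5, 0.0, -1.0, 0.2, 0.4, 0.1, 0.0, -0.5]
probs = softmax(scores)
print(probs.index(max(probs)))  # 2
```

The predicted class is simply the index with the highest probability, here the third entry.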

Training LeNet: A Computational Journey

LeNet’s training process was as groundbreaking as its architecture. The network used backpropagation to adjust its weights and biases so as to reduce the error between its predictions and the ground truth. Gradient descent repeatedly updated the parameters to reach higher accuracy.
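The update rule behind this process can be illustrated on a much smaller problem. The sketch below trains a single linear unit with gradient descent on a squared-error loss; it is a toy stand-in for backpropagation through a full network, and the data, learning rate, and epoch count are assumptions chosen for the example.

```python
def train_linear_unit(samples, lr=0.1, epochs=500):
    """Fit pred = w * x + b by stochastic gradient descent on 0.5 * (pred - target)**2."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = (w * x + b) - target
            # Gradients of the loss: d/dw = error * x, d/db = error.
            w -= lr * error * x
            b -= lr * error
    return w, b


# Toy data generated from target = 2 * x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train_linear_unit(samples)
print(round(w, 3), round(b, 3))
```

In a network like LeNet the same idea applies to every weight, with backpropagation supplying the gradient of the error with respect to each one.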

A known challenge in training early neural networks such as LeNet was vanishing gradients, particularly with activation functions such as sigmoid or tanh. Although these functions introduce non-linearity well, their gradients can become too small to drive useful updates in deeper networks. This problem was later eased by the introduction of the ReLU activation; at the time of LeNet, however, careful weight initialization and learning-rate tuning were both necessary to reach convergence.
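A back-of-the-envelope sketch shows why sigmoid gradients shrink with depth. The derivative of the sigmoid is at most 0.25, so a chain of such derivatives decays quickly. The sketch ignores the weights between layers, which is a simplifying assumption, and the function names are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum value, 0.25, occurs at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def chained_gradient(depth, pre_activation=0.0):
    """Product of sigmoid derivatives across `depth` layers (weights ignored)."""
    gradient = 1.0
    for _ in range(depth):
        gradient *= sigmoid_grad(pre_activation)
    return gradient


for depth in (1, 5, 10):
    print(depth, chained_gradient(depth))
```

Even in this best case the gradient falls below one millionth after ten layers, which is why deeper networks needed different activations or architectures.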

Data preprocessing also played a vital role in training LeNet. Normalizing the input images and augmenting the training set (e.g., with rotation, translation, and scaling) enhanced the model’s ability to generalize. These methods, now standard practice in machine learning, were groundbreaking at the time of LeNet’s conception.
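Two of these preprocessing steps, normalization and translation, are easy to sketch in plain Python. The 0 to 255 pixel range and the one-pixel shift are assumptions for the example, and the helper names are hypothetical.

```python
def normalize(image, max_value=255.0):
    """Scale pixel values from [0, max_value] to [0, 1]."""
    return [[pixel / max_value for pixel in row] for row in image]


def translate(image, dx, dy, fill=0):
    """Shift the image dx pixels right and dy pixels down, filling exposed cells."""
    height, width = len(image), len(image[0])
    shifted = [[fill] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            new_r, new_c = r + dy, c + dx
            if 0 <= new_r < height and 0 <= new_c < width:
                shifted[new_r][new_c] = image[r][c]
    return shifted


# Made-up 3x3 image with a bright vertical stroke in the middle column.
image = [[0, 255, 0],
         [0, 255, 0],
         [0, 255, 0]]

print(normalize(image))
print(translate(image, dx=1, dy=0))
```

Training on shifted copies of each digit encourages the network to recognize it regardless of its exact position.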

LeNet’s Influence on Modern Architectures

While LeNet was designed for relatively simple tasks, its architectural principles have inspired some of the most advanced CNN models in existence today. AlexNet, VGG, ResNet, and Inception all build on the convolutional and pooling layers used by LeNet.

For instance, AlexNet extended LeNet by adding more layers and leveraging GPUs to speed up training. It also adopted rectified linear units (ReLUs) as its activation function to counter the vanishing gradient problem that affected networks like LeNet. The success of AlexNet in the 2012 ImageNet challenge spurred renewed interest in deep learning and demonstrated that the technology could scale to far more complex image recognition problems.

VGG built on LeNet’s ideas by standardizing the size of its convolution kernels and increasing the depth of the network. By stacking small convolutional filters, VGG achieved remarkable performance while remaining easy to interpret, a principle reminiscent of LeNet’s design.

ResNet, meanwhile, introduced residual connections to mitigate vanishing gradients in networks with many layers. With this innovation, networks could be made much deeper without a loss in performance. Despite these advancements, the underlying philosophy of hierarchical feature extraction, popularized by LeNet, remains at the core of these architectures.

LeNet in the Age of Transfer Learning

One of the most influential developments in machine learning has been transfer learning, in which a model trained for one application is retrained for another. LeNet itself has not been widely used for transfer learning, because its small capacity limits how well it generalizes to new data, but the ideas it introduced are at the heart of the practice.

Current state-of-the-art pre-trained models such as ResNet or Inception are built from convolutional layers that follow the pattern LeNet established. Such layers are trained on massive datasets (e.g., ImageNet) and then carried over to new tasks, reusing the learned filters. This idea of feature reuse, implicit in LeNet’s hierarchical learning process, became the foundation of transfer learning.

Adaptability of LeNet for Modern Challenges

Though LeNet was built to address tasks such as digit recognition, its architecture can be modified to address more recent problems. By stacking further convolutional and pooling layers and so adding depth, the network can learn increasingly complicated patterns in higher-resolution images. This adaptability underscores the timeless nature of LeNet’s design.

For example, modified versions of LeNet have been applied to abnormality detection in medical images such as X-rays or MRI scans. These adaptations may add layers or change the activation function to fit the properties of medical datasets. Furthermore, LeNet-based models have been applied in low-resource environments where computational cost is a concern.

The Educational Value of LeNet

Because LeNet is very intuitive, it is a good starting point for learning the basics of deep learning. People with no prior experience can experiment with the architecture to gain insight into how concepts such as convolution, pooling, and backpropagation work. Since it is relatively compact, it can easily be trained and benchmarked on standard hardware, which makes it accessible to a wide range of users.

In addition, LeNet is a historical example of how creative thinking can get around the technological limitations of the day. By studying how it was developed and what it accomplished, students and researchers alike can learn valuable lessons about the history of machine learning.

The Ethical and Philosophical Implications of LeNet

Although the technology has evolved further, LeNet raises deep ethical and philosophical questions about the position of AI in society. Its success in automating tasks such as handwriting recognition demonstrated that machines could take over work in certain areas from humans. While this has increased efficiency significantly, it also forces us to be mindful of the social implications of AI.

LeNet also illustrates the value of transparency and interpretability in machine learning. Thanks to its simplicity, it is easy to understand and is therefore an interesting case study for explainable AI. But as machine learning systems grow more complex, keeping their decision-making processes transparent is a question that future researchers will need to address.

The Enduring Legacy of LeNet

LeNet’s legacy extends far beyond its technical contributions. It marks a watershed moment in history, when machine learning moved from theory to application. Its success showcased the potential of deep learning and showed how neural networks can be used to get practical results.

LeNet is now celebrated as a turning point in the evolution of AI, not only as a point of reference but also as a source of inspiration. Its principles continue to inform the design of current architectures and will likely remain a benchmark for future ones. When we consider the future of machine learning, the story of LeNet reminds us of the power of innovation and the lasting importance of foundational work.

LeNet remains a good example of how an idea that seemed unlikely to succeed, yet was powerful in concept, can reshape an entire industry and spread readily into the wider technology world.

Structure of the LeNet Network

LeNet is made up of several layers: convolutional, pooling, and fully connected. The layers are connected in a feedforward manner, which means that the output of one layer is utilized as the input for the following layer. The architecture includes the following layers:


Input layer

Convolutional layers

Pooling layers

Fully connected layer

Output layer

The LeNet-5 CNN architecture has seven layers: three convolutional layers, two subsampling layers, and two fully connected layers.

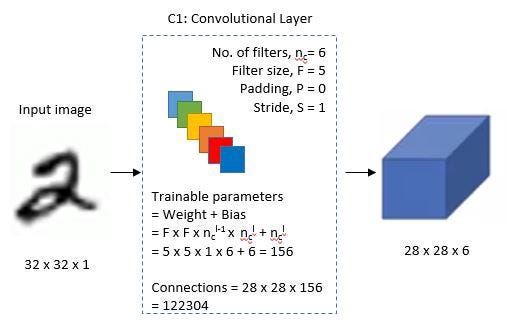

First Layer

LeNet-5 receives a 32×32 grayscale image as input and processes it with the first convolutional layer, which consists of six feature maps or filters of size 5×5 with a stride of one. The image’s dimensions change from 32x32x1 to 28x28x6.

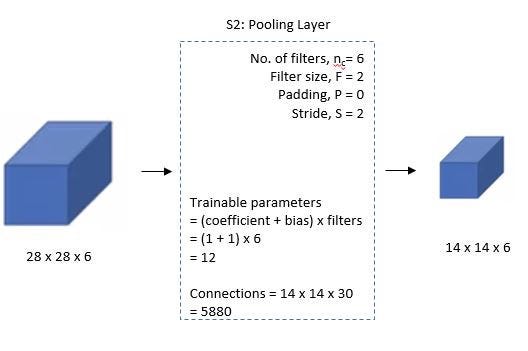

Second Layer

LeNet-5 then applies an average pooling or sub-sampling layer, with a filter size of 2×2 and a stride of 2. The resulting image size is reduced to 14x14x6.

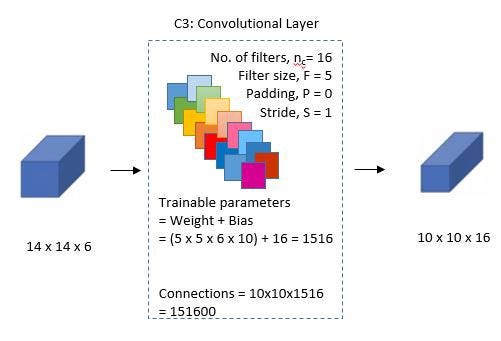

Third Layer

A second convolutional layer with 16 feature maps of size 5×5 and a stride of 1 is then introduced, producing a 10x10x16 output. Most of the 16 feature maps in this layer are connected to only a subset of the six feature maps in the layer below; only one of them is connected to all six, as shown in the diagram below.

The major goal is to break the network’s symmetry while keeping the number of connections manageable. As a result, this layer has 1,516 trainable parameters instead of 2,400, and 151,600 connections instead of 240,000.
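The parameter and connection counts quoted above can be reproduced with a short calculation. The sketch assumes the grouping given in the original LeNet-5 paper’s connection table: six feature maps read from three input maps, nine read from four, and one reads from all six.

```python
KERNEL = 5                 # 5x5 filters
OUTPUT_POSITIONS = 10 * 10  # each feature map in this layer is 10x10

# Number of input feature maps feeding each of the 16 output feature maps
# (grouping assumed from the original paper's connection table).
inputs_per_map = [3] * 6 + [4] * 9 + [6] * 1

# Each output map has one 5x5 kernel per connected input map, plus one bias.
sparse_params = sum(n * KERNEL * KERNEL + 1 for n in inputs_per_map)
sparse_connections = sparse_params * OUTPUT_POSITIONS

# Fully connected alternative, counting weights only (no biases),
# which is how the figure of 2,400 in the text is obtained.
full_weights = 16 * 6 * KERNEL * KERNEL
full_connections = full_weights * OUTPUT_POSITIONS

print(sparse_params, sparse_connections)  # 1516 151600
print(full_weights, full_connections)     # 2400 240000
```

Note that the sparse figure includes the 16 biases while the dense figure of 2,400 does not, so the actual saving is slightly larger than the two numbers suggest.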

Fourth Layer

With a filter size of 2×2 and a stride of 2, the fourth layer (S4) is once again an average pooling layer. It is identical to the second layer (S2) except that it has 16 feature maps, so the output size is reduced to 5x5x16.

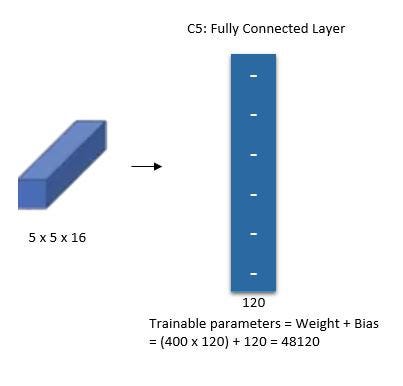

Fifth Layer

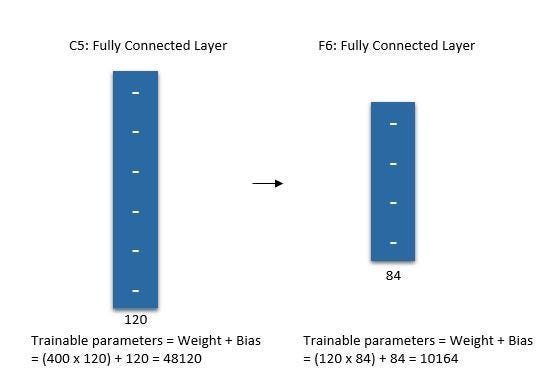

The fifth layer (C5) is a fully connected convolutional layer with 120 feature maps, each of size 1 x 1. Each of the 120 units in C5 is connected to all 400 nodes (5x5x16) in layer four, S4.

Sixth Layer

A fully connected layer (F6) with 84 units makes up the sixth layer.

Output Layer

The final layer is the softmax output layer, which has ten possible values corresponding to the digits 0 through 9.

Summary of LeNet-5 Architecture
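The layer sizes described above can be tabulated with a short script. It is a sketch that applies the usual output-size formula, (input − kernel) / stride + 1, to each stage; the 5×5 kernel for C5 is an assumption consistent with a 5x5x16 input producing 1×1 feature maps.

```python
def output_size(size, kernel, stride=1):
    """Spatial output size of a convolution or pooling step without padding."""
    return (size - kernel) // stride + 1


# (name, kernel size, stride, number of feature maps)
SPATIAL_LAYERS = [
    ("C1 conv 5x5", 5, 1, 6),
    ("S2 avg-pool 2x2", 2, 2, 6),
    ("C3 conv 5x5", 5, 1, 16),
    ("S4 avg-pool 2x2", 2, 2, 16),
    ("C5 conv 5x5", 5, 1, 120),
]


def trace_shapes(input_size=32):
    """Return (layer name, height, width, channels) for every stage of LeNet-5."""
    size = input_size
    shapes = [("input", size, size, 1)]
    for name, kernel, stride, channels in SPATIAL_LAYERS:
        size = output_size(size, kernel, stride)
        shapes.append((name, size, size, channels))
    shapes.append(("F6 dense", 1, 1, 84))
    shapes.append(("output", 1, 1, 10))
    return shapes


for name, height, width, channels in trace_shapes():
    print(f"{name:<16} {height}x{width}x{channels}")
```

Running it prints one line per layer, from the 32x32x1 input down to the ten output units.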

Implementation

We will implement the LeNet-5 architecture on the MNIST dataset, whose 28×28 images of handwritten characters are well suited to demonstrating the architecture.
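One practical detail is that MNIST images are 28×28 while LeNet-5 expects a 32×32 input. A common way to bridge the gap, assumed here rather than taken from this article, is to zero-pad each image by two pixels on every side. The sketch below uses a placeholder image instead of real MNIST data, and `pad_image` is a hypothetical helper.

```python
def pad_image(image, pad=2, fill=0):
    """Surround `image` (a list of rows) with `pad` rows and columns of `fill`."""
    width = len(image[0]) + 2 * pad
    padded = [[fill] * width for _ in range(pad)]        # top border
    for row in image:
        padded.append([fill] * pad + list(row) + [fill] * pad)
    padded.extend([fill] * width for _ in range(pad))    # bottom border
    return padded


# Placeholder 28x28 image filled with ones; real code would load MNIST instead.
mnist_like = [[1] * 28 for _ in range(28)]
padded = pad_image(mnist_like)

print(len(padded), len(padded[0]))  # 32 32
```

An alternative is to keep the 28×28 input and add padding inside the first convolutional layer, which gives the same 28×28 feature maps after C1.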

Conclusion

LeNet is a showcase of what advances in machine learning can deliver. By providing a structured, automated approach to image recognition, it set the stage for a rich variety of advances in artificial intelligence. Although more advanced models have since been devised, the principles and approaches established with LeNet are as relevant today as they ever were. As we continue to explore what AI is capable of and how to get there, the history of LeNet offers a valuable lesson in the importance of fundamental research and the lasting impact it can have on the next generation of technologies.
