The Cornerstones of Modern AI

Transfer learning and fine-tuning have had a revolutionary impact on the fast-growing field of artificial intelligence, changing a multitude of industries from medicine to entertainment. These two techniques have become the de facto standard for building models that perform well yet remain lightweight. They dramatically shorten development time and make high-quality results achievable even with limited data or restricted computing power.

Understanding Transfer Learning in Deep Learning

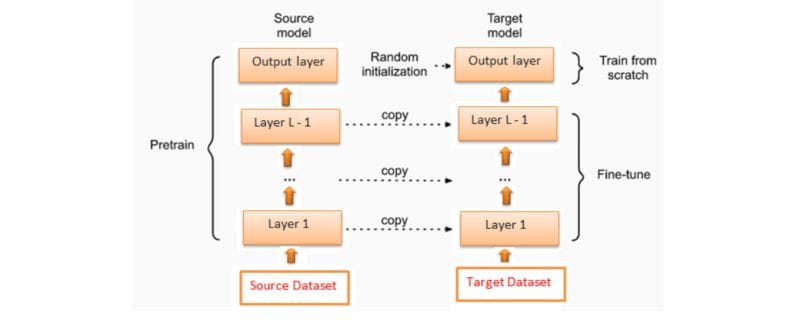

Transfer learning is inspired by the human ability to generalize knowledge from one domain to another when facing new problems. In deep learning, a pre-trained model (that is, a model already trained on a huge dataset) is applied to a new task. The underlying idea is that pre-trained models have already acquired rich, reusable representations. The new task can therefore be learned by transferring that knowledge rather than by retraining from scratch.

For example, a convolutional neural network (CNN) such as ResNet, pre-trained on the ImageNet benchmark, learns general-purpose features such as edges and textures. These features can be adapted to a wide range of computer vision tasks. Because they are reusable, transfer learning lets the engineer train only the task-specific layers, which greatly reduces computational cost.
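The Python below is a minimal, dependency-free sketch of this idea, often called feature extraction. A tiny hypothetical "backbone" with fixed weights stands in for pre-trained layers such as ResNet's, and only a logistic-regression head is trained on top of its features. The weights in `FROZEN_W`, the toy dataset, and all function names are illustrative assumptions, not part of any library. In a real framework the backbone would be a downloaded pre-trained network.

```python
import math

# Hypothetical "pre-trained" weights standing in for layers learned on a
# large source dataset. They are frozen: nothing below ever modifies them.
FROZEN_W = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]


def backbone(x):
    """Frozen feature extractor: one ReLU layer with fixed weights."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(data, epochs=200, lr=0.5):
    """Train only a logistic-regression head on the frozen features."""
    feats = [(backbone(x), y) for x, y in data]  # computed once, then reused
    w, b = [0.0] * len(FROZEN_W), 0.0
    for _ in range(epochs):
        for f, y in feats:
            # Gradient of the log loss with respect to the logit is (p - y).
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    """Class 1 if the head's logit on the frozen features is non-negative."""
    z = sum(wi * fi for wi, fi in zip(w, backbone(x))) + b
    return 1 if z >= 0 else 0


# Toy target task: label 1 when the first input exceeds the second.
DATA = [([2.0, 0.0], 1), ([1.5, 0.5], 1), ([3.0, 1.0], 1),
        ([0.0, 2.0], 0), ([0.5, 1.5], 0), ([1.0, 3.0], 0)]

w, b = train_head(DATA)
print([predict(w, b, x) for x, _ in DATA])
```

The backbone is never updated, so its features can be computed once and cached. Much of the computational saving comes from this.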

The Role of Pre-Trained Models in Transfer Learning

Pre-trained models act as the foundational building blocks of transfer learning. They are typically trained on extremely large datasets with substantial computing power, which makes them highly capable on the tasks they were trained for. Well-known examples include BERT for natural language processing (NLP) and VGGNet for image recognition. These models encode broad, general knowledge that can be adapted to produce task-specific outputs, which reduces development time and improves performance.

One of the main benefits of transfer learning is that it works with limited data. Training a deep learning model from scratch usually requires a large amount of annotated data, which is rarely available. Transfer learning can extract enough information from a small dataset, so it removes this bottleneck and holds promise for making AI more scalable and more widely accessible.

Fine-Tuning: Enhancing Pre-Trained Models

Fine-tuning is a key part of transfer learning: it adapts a pre-trained network to a specific task. This normally means adjusting the model's weights on a task-specific dataset while preserving the general knowledge encoded in the earlier layers. The right approach depends on the complexity of the new task and the size of the dataset. It ranges from simple procedures, such as retraining only the final layer, to more involved ones in which large parts of the original network are adapted over several fine-tuning stages.
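One staged approach is gradual unfreezing, popularized by the ULMFiT method for NLP. Training starts with only the top layer, and one more layer is unfrozen at each stage. The sketch below only computes such a schedule. The layer names are hypothetical placeholders, and the number of stages is an assumption to tune per task.

```python
def gradual_unfreezing_schedule(layer_names, n_stages):
    """Return, for each stage, the layers that are trainable in that stage.

    Stage 0 trains only the top layer; each later stage unfreezes one more
    layer from the top, until the whole network is trainable.
    """
    schedule = []
    for stage in range(n_stages):
        n_trainable = min(stage + 1, len(layer_names))
        schedule.append(layer_names[len(layer_names) - n_trainable:])
    return schedule


# Hypothetical layers of a pre-trained network, ordered bottom to top.
LAYERS = ["embedding", "block1", "block2", "head"]
for stage, trainable in enumerate(gradual_unfreezing_schedule(LAYERS, 4)):
    print(f"stage {stage}: train {trainable}")
```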

If only a small amount of new data is available, the usual practice is to freeze the lower layers of the model and train only the top, task-specific layers [7]. With a larger dataset, fine-tuning the whole model end to end offers much more flexibility. Choosing carefully which parts of the model to fine-tune lets one preserve its general knowledge while strongly specializing it for the task at hand.
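The sketch below shows the mechanics of freezing in plain Python. It uses a hypothetical three-layer model whose layers carry a `trainable` flag, and an SGD step that leaves frozen layers untouched. Layer names, weights, and gradients are made-up values. In PyTorch the same effect is usually obtained by setting `requires_grad = False` on the parameters of the frozen layers.

```python
def make_model():
    """Hypothetical three-layer model; each layer holds a flat list of weights."""
    return [
        {"name": "lower", "weights": [0.2, -0.1], "trainable": True},
        {"name": "upper", "weights": [0.5, 0.3], "trainable": True},
        {"name": "head", "weights": [0.0, 0.0], "trainable": True},
    ]


def freeze_lower_layers(model, n_trainable):
    """Freeze every layer except the top `n_trainable` ones."""
    cutoff = len(model) - n_trainable
    for i, layer in enumerate(model):
        layer["trainable"] = i >= cutoff


def sgd_step(model, grads, lr=0.1):
    """Plain SGD update that leaves frozen layers untouched."""
    for layer, g in zip(model, grads):
        if not layer["trainable"]:
            continue  # frozen: keep the pre-trained weights as they are
        layer["weights"] = [w - lr * gi for w, gi in zip(layer["weights"], g)]


# Small dataset: train only the head (made-up gradients of 1.0 everywhere).
small = make_model()
freeze_lower_layers(small, n_trainable=1)
sgd_step(small, [[1.0, 1.0]] * 3)

# Larger dataset: fine-tune all layers end to end.
large = make_model()
freeze_lower_layers(large, n_trainable=3)
sgd_step(large, [[1.0, 1.0]] * 3)
```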

Applications of Transfer Learning and Fine-Tuning

Transfer learning and fine-tuning are now used so widely that they have become a standard approach across many disciplines. In medicine, for example, they are used to interpret medical images such as X-rays and MRIs to aid disease diagnosis. Thanks to pre-trained models, clinicians can build clinically relevant diagnostic tools from small, sparsely labeled datasets.

Transfer learning has also been a major driver of progress in natural language processing. Models such as GPT and BERT have transformed tasks like sentiment analysis, machine translation, and text summarization. Businesses can deploy domain-specific AI solutions by fine-tuning these general-purpose pre-trained models on annotated, domain-specific text corpora.

In autonomous vehicles, transfer learning is applied to perception systems for object detection, lane detection, and navigation in complex environments. By building on pre-trained vision models, car manufacturers can speed up the rollout of autonomous-driving features. They can do so while still achieving the accuracy and robustness these applications require.

Advantages of Transfer Learning and Fine-Tuning

The practical effects of transfer learning and fine-tuning go beyond technical benefits. Economically, these methods save substantial time and money when building AI solutions. Reusing existing models lets organizations shorten development cycles and make better use of their resources.

Transfer learning also makes AI accessible to small organizations and individual researchers, which makes the field more inclusive. Organizations without large computing budgets or large datasets can use state-of-the-art AI models to accomplish their objectives. This broader access has great potential to spur innovation and spread best practices across disciplines.

Challenges and Limitations

Despite these advantages, transfer learning and fine-tuning have limitations. One of the main drawbacks is the risk of overfitting, especially when the model is fine-tuned on a small amount of data. Overfitting occurs when a model fits the training data so closely that it fails to generalize to new data. Techniques such as data augmentation, regularization, and careful hyperparameter tuning are commonly used to mitigate it.
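L2-SP is one regularizer designed specifically for fine-tuning. It penalizes the distance between the fine-tuned weights and the pre-trained starting point, instead of the distance to zero. The sketch assumes a toy quadratic training loss whose minimizer (`TARGET`) stands in for weights that overfit a small dataset. `PRETRAINED`, `TARGET`, and the values of `alpha` are hypothetical.

```python
# Hypothetical values: pre-trained weights, and the minimizer of a toy
# training loss that stands in for weights overfitting a small dataset.
PRETRAINED = [1.0, 1.0]
TARGET = [3.0, -1.0]


def l2_sp_penalty(w, w0, alpha):
    """L2-SP penalty: (alpha / 2) * ||w - w0||^2."""
    return 0.5 * alpha * sum((wi - w0i) ** 2 for wi, w0i in zip(w, w0))


def fine_tune(alpha, steps=300, lr=0.1):
    """Gradient descent on 0.5*||w - TARGET||^2 plus the L2-SP penalty."""
    w = list(PRETRAINED)  # fine-tuning starts from the pre-trained weights
    for _ in range(steps):
        # Data-loss gradient (w - TARGET) plus penalty gradient alpha*(w - w0).
        grad = [(wi - ti) + alpha * (wi - w0i)
                for wi, ti, w0i in zip(w, TARGET, PRETRAINED)]
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


print(fine_tune(alpha=0.0))  # no regularization: drifts all the way to TARGET
print(fine_tune(alpha=1.0))  # L2-SP: settles between PRETRAINED and TARGET
```

Setting the gradient to zero shows that the weights settle at (TARGET + alpha * PRETRAINED) / (1 + alpha). With `alpha = 1` that is the midpoint between the two. Larger values of `alpha` keep the model closer to what it learned during pre-training.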

Another challenge is selecting an appropriate pre-trained model. With so many options available, choosing the right model for the intended task and type of data can be difficult. Moreover, a pre-trained model may perform poorly if it is applied without further adaptation to a task that differs substantially from the one it was originally trained for.

Continuous research and development in transfer learning, however, keeps improving both accuracy and accessibility.

Best Practices for Implementing Transfer Learning and Fine-Tuning

To get the most out of transfer learning and fine-tuning, it pays to follow a few practical guidelines. One of the most effective steps is to choose a pre-trained model whose original task is closely related to the target task. Starting from such a model requires less training and is usually the most efficient route to better performance. For example, using a vision model for an image-based task, or an NLP model for a text-based task, gives a much stronger baseline.
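Candidate pre-trained models can be compared cheaply before committing to full fine-tuning. One common way is to extract features with each frozen model and measure how well a simple classifier separates the target classes. The sketch below uses a nearest-centroid classifier as that probe, a simpler stand-in for the usual linear probe. Both candidate "backbones" are hypothetical one-line feature functions, and the data is a toy example.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def probe_accuracy(extract, train, val):
    """Nearest-centroid probe: fit class centroids on frozen features of
    `train`, then report accuracy on `val`."""
    by_class = {}
    for x, y in train:
        by_class.setdefault(y, []).append(extract(x))
    cents = {y: centroid(fs) for y, fs in by_class.items()}
    correct = 0
    for x, y in val:
        f = extract(x)
        pred = min(cents, key=lambda c: math.dist(f, cents[c]))
        correct += pred == y
    return correct / len(val)


def select_backbone(candidates, train, val):
    """Score every candidate feature extractor and return the best name."""
    scores = {name: probe_accuracy(fn, train, val) for name, fn in candidates.items()}
    return max(scores, key=scores.get), scores


# Hypothetical candidates for a task where label 1 means x[0] > x[1].
CANDIDATES = {
    "related_backbone": lambda x: [x[0] - x[1]],    # features match the task
    "unrelated_backbone": lambda x: [x[0] + x[1]],  # features ignore the task
}
TRAIN = [([2, 0], 1), ([3, 1], 1), ([0, 2], 0), ([1, 3], 0)]
VAL = [([4, 1], 1), ([1, 4], 0), ([2, 1], 1), ([1, 2], 0)]

best, scores = select_backbone(CANDIDATES, TRAIN, VAL)
print(best, scores)
```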

The choice of fine-tuning strategy should be guided by the characteristics of the dataset, such as its size and its similarity to the original training data. Common strategies include freezing some layers, or training the lower layers more slowly (with a smaller learning rate) than the upper layers. Either way, the model adapts gradually and iteratively.
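Below is a minimal sketch of the "freeze some layers, train lower layers more slowly" strategy, sometimes called discriminative fine-tuning. The top layer gets the largest learning rate, each layer further down gets that rate divided by a constant factor, and the lowest layers are frozen with a learning rate of 0. The default factor of 2.6 follows the value suggested for ULMFiT. The layer names, `top_lr`, and the `decay` used in the example are illustrative assumptions. In PyTorch, such a mapping would typically be passed to an optimizer as per-layer parameter groups.

```python
def layerwise_learning_rates(layer_names, top_lr, decay=2.6, n_frozen=0):
    """Map each layer (ordered bottom to top) to a learning rate.

    The top layer gets `top_lr`; each layer below gets the rate of the layer
    above divided by `decay`. The lowest `n_frozen` layers get 0.0, i.e. they
    are frozen.
    """
    n = len(layer_names)
    rates = {}
    for i, name in enumerate(layer_names):
        depth_from_top = n - 1 - i
        rates[name] = 0.0 if i < n_frozen else top_lr / decay ** depth_from_top
    return rates


# Hypothetical layer names; decay=2.0 keeps the numbers easy to read.
LAYERS = ["embedding", "block1", "block2", "head"]
print(layerwise_learning_rates(LAYERS, top_lr=1e-3, decay=2.0, n_frozen=1))
```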

Fine-tuning should always be accompanied by continuous monitoring and quality checks of the model. Engineers can use metrics such as accuracy, precision, and recall to identify weaknesses and iterate toward the most effective solution.
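The three metrics named above can be computed from predicted and true labels, as in the sketch below. It assumes a binary task with `1` as the positive class, and the example labels are made up.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision and recall for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Guard against division by zero when nothing is predicted/present.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up labels from one evaluation round of a fine-tuned model.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

Tracking these numbers after each fine-tuning round shows whether a change improved recall at the cost of precision, or vice versa.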

Real-World Case Studies in Transfer Learning

During the COVID-19 pandemic, for example, researchers applied transfer learning to interpret chest X-ray images and detect signs of the disease. Fine-tuning pre-trained models such as DenseNet on medical image datasets offered the prospect of rapid and accurate diagnosis. Such tools could help healthcare systems under tremendous pressure.

Another compelling case is e-commerce, where recommendation systems based on transfer learning have changed the way businesses engage with customers. Models pre-trained and then retrained on user-behavior data can help platforms such as Amazon or Netflix deliver more tailored, personalized recommendations. These recommendations increase user engagement and satisfaction.

In agriculture, transfer learning is aiding precision farming. Satellite imagery models trained for general environmental applications are adapted for crop health assessment, yield estimation, and resource management. This shows how transfer learning can help address global challenges by giving industry smart solutions.

The Role of Transfer Learning in Emerging Technologies

Transfer learning is increasingly embedded in frontier technologies such as generative AI and robotics. Generative models such as StyleGAN and DALL-E use pre-trained neural networks to produce hyper-realistic images and artistic styles. Fine-tuning such models on domain-specific data is a promising direction for art, design, and media.

In robotics, transfer learning helps machines generalize to tasks and environments that differ from their training data. Robots equipped with vision models pre-trained on large corpora can be fine-tuned for specific uses, such as object recognition in industrial production or assistive care for people with disabilities. This flexibility extends robotic systems to an enormous range of applications.

The Future of Transfer Learning and Fine-Tuning

As artificial intelligence continues to advance, transfer learning and fine-tuning will remain among the most powerful drivers of deep learning development. Ongoing research directions such as federated learning and self-supervised learning draw on these methods to make AI applications more powerful and more privacy-respecting.

Advances in hardware and software are also making transfer learning more widely applicable and more accessible. Cloud platforms and model repositories of general-purpose pre-trained models are becoming easier to use. As a result, practitioners find it increasingly simple to add transfer learning to their processing pipelines, which opens the door to interdisciplinary innovation.

Transfer learning and fine-tuning are exciting frontiers for the deep learning community. Pre-trained models can be fine-tuned for specific tasks, which makes it feasible to build more robust and scalable AI systems even with limited data and limited computing power. Their potential to push artificial intelligence to new heights is matched by the sheer number of possible uses in this space.

Additional Insights into Transfer Learning and Fine-Tuning

The reach of transfer learning also extends to education and financial technology (fintech). In education, advanced NLP models power systems that recommend tailored learning resources based on a student's performance. Models fine-tuned for different curricula or student cohorts can deliver strong learning outcomes at very low cost.

In fintech, fraud detection systems use transfer learning to recognize anomalous patterns in transaction data. Fine-tuning pre-trained models on financial data can make fraud detection more efficient and catch fraud earlier, which reduces financial losses. These applications illustrate how transfer learning addresses a broad spectrum of problems across many domains.

Bridging Research and Industry

Transfer learning and fine-tuning have become a dominant force, mainly in industry but also in academic research. Researchers use transfer learning not only to speed up hypothesis testing but also to explore new scientific fields. Climate researchers, for example, apply pre-trained models to environmental variables to capture trends and predict the direction of change more accurately.

Collaboration between academia and industry further fosters innovation. Open-source frameworks such as TensorFlow and PyTorch provide model repositories, giving research and development teams pre-trained models to experiment with, adapt, and share. In biomedical engineering, for instance, linking laboratory research with industrial applications has accelerated development worldwide.

Ethical Considerations in Transfer Learning

As with any technology, transfer learning and fine-tuning raise ethical concerns. Issues of bias, fairness, and transparency must be addressed so that AI systems benefit society rather than harm it. Pre-trained models are often trained on large amounts of publicly available data, so they can inherit the societal biases present in that data. Fine-tuning on more representative and balanced data can help mitigate these biases.
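Sometimes collecting more balanced data is not possible. A common complementary step during fine-tuning is then to reweight the loss so that under-represented classes or groups count as much as frequent ones. The sketch below computes inverse-frequency weights with the formula n_samples / (n_classes * count). This is the heuristic scikit-learn uses for `class_weight="balanced"`. The labels and the helper `weighted_mean_loss` are hypothetical illustrations.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def weighted_mean_loss(losses, labels, weights):
    """Average per-example losses, scaling each by its class weight."""
    total = sum(weights[y] * loss for loss, y in zip(losses, labels))
    return total / sum(weights[y] for y in labels)


# Hypothetical fine-tuning set in which group "b" is under-represented.
labels = ["a", "a", "a", "b"]
weights = balanced_class_weights(labels)
print(weights)
print(weighted_mean_loss([1.0, 1.0, 1.0, 3.0], labels, weights))
```

With these weights each class contributes the same total weight (here 2.0 each). Errors on the rare class therefore move the loss as much as errors on the common one.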

Transparency in model development and deployment is equally important. Communicating the limitations and risks of AI systems better equips users to work with them and keeps developers accountable. Transfer-learning-based AI, such as facial recognition, is becoming more widespread, and these ethical issues must be addressed to foster trust and long-term viability.

Preparing for the Future of AI

Looking ahead, combining transfer learning with emerging fields such as quantum computing and edge AI is an exciting prospect. Quantum approaches to model optimization could potentially enhance pre-trained models by accelerating computation and opening up new kinds of problem solving. Edge AI means running an AI model locally on an edge device rather than on a central server. It also relies on transfer learning to deliver acceptable performance with low latency in everyday, real-world applications.

Educational programs will play a key role in teaching the next generation of AI practitioners how to use transfer learning effectively. Such programs can encourage active engagement, a culture of lifelong learning, and interdisciplinary skills. In doing so, they can help realize the advantages of transfer learning and fine-tuning and ultimately boost innovation and development at a global scale.
